IT specialists and network administrators are usually the ones who optimize network performance and keep information flowing smoothly. However, understanding the nuances of network throughput and latency is useful for anyone who wants insight into network infrastructure. This article compares throughput vs. latency so that you can plan optimizations and streamline organizational operations.

What is Latency in Networking?

When a user browses a website or checks email, data travels back and forth between the user's device and servers. In this context, latency is the time a request takes to travel from the sender to the receiver and for the response to travel back. Simply put, it is the time it takes for a request from a browser to reach a server, be processed, and return.

Network latency is also described as lag in communications: a longer delay means high latency, and a faster response means low latency. Before looking at the difference between latency and throughput, the next section covers the basics of throughput.

What is Throughput in Networking?

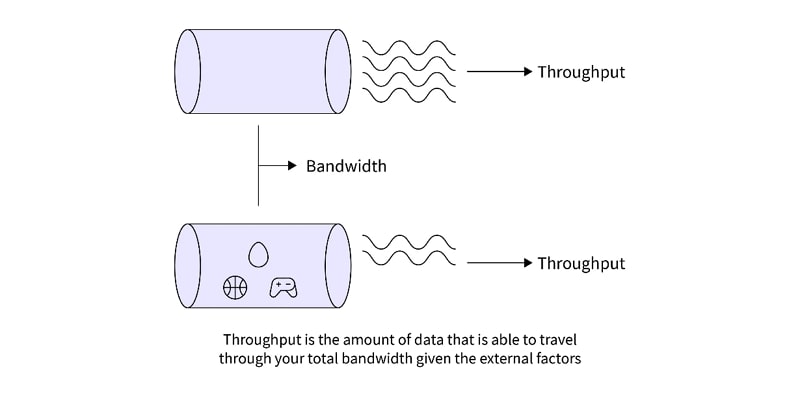

Network throughput is the amount of data successfully transferred from source to destination within a specific timeframe. In other words, it gauges how much data is sent from one point to another per unit of time, usually expressed in bits or bytes per second. This critical performance metric reflects the capacity and efficiency of a network infrastructure.

You can picture throughput as a highway during rush hour, where throughput is the number of cars that pass a given point per hour. Throughput usually depends on factors such as available bandwidth, signal-to-noise ratio, and hardware capabilities.
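
Because throughput is quoted in bits per second while file sizes are usually given in bytes, a small conversion helper makes the definition concrete. The following is a minimal sketch. The function names and the 250 MB example are illustrative assumptions, not part of any particular tool.

```python
def throughput_bps(bytes_transferred: int, elapsed_seconds: float) -> float:
    """Return average throughput in bits per second."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return bytes_transferred * 8 / elapsed_seconds


def format_rate(bps: float) -> str:
    """Format a bit rate with decimal (SI) prefixes, as network rates usually are."""
    for unit, factor in (("Gbps", 1e9), ("Mbps", 1e6), ("kbps", 1e3)):
        if bps >= factor:
            return f"{bps / factor:.2f} {unit}"
    return f"{bps:.0f} bps"


# Hypothetical example: 250 MB (decimal megabytes) downloaded in 20 seconds.
rate = throughput_bps(250_000_000, 20.0)
print(format_rate(rate))  # 100.00 Mbps
```

Note the factor of 8: a download manager showing 12.5 MB/s and a speed test showing 100 Mbps are reporting the same rate.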

Why are Throughput and Latency Important?

Throughput and latency are critical performance metrics in many kinds of systems, such as software applications, databases, and computer networks. Each matters to user experience and performance for different reasons, which are described separately below:

Importance of Latency

- Responsiveness: Low latency is vital for the responsiveness of interactive applications such as video conferencing and online gaming.

- System Performance: Latency is an essential factor to consider while maintaining synchronization and consistency between different components in distributed systems.

- User Experience: In web applications and online servers, low latency elevates user experience by providing a faster response time.

Importance of Throughput

- Scalability: Systems with higher throughput scale better because they can handle an increased workload without compromising performance.

- Capacity Utilization: Higher throughput means your system resources are used efficiently, leading to a better return on infrastructure investment.

- Efficiency: With high throughput value, systems process your commands quickly with reduced wait time, which leads to efficient operations.

How to Measure Latency and Throughput?

Measuring latency and throughput effectively can provide insights into the performance of network connections, services, and applications. Here’s how you can measure each:

Measuring Latency

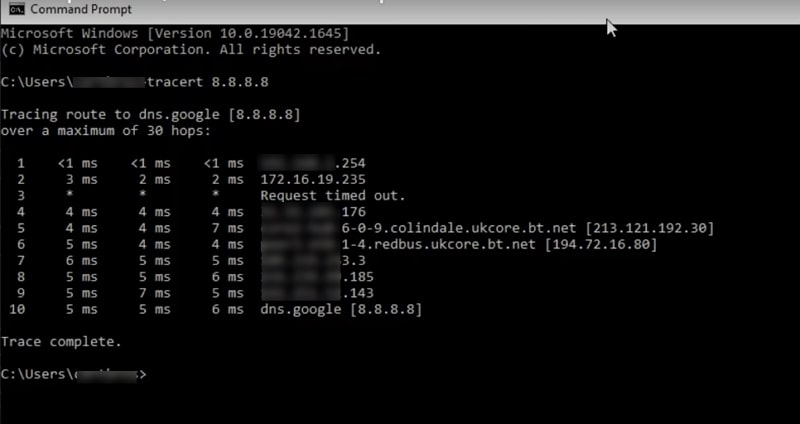

Latency measures the time a data packet takes to travel from the source to the destination. To measure it, you can use tools like ping or traceroute. These tools send packets to a destination and record how long each response takes to come back, and ping summarizes the samples with an average latency value. Latency is commonly expressed through two metrics, which are explained below:

- Time to First Byte: This metric is the time between the browser sending a request and the first byte of the response arriving, typically counted once a secure connection has been established. Lower values mean the server and network respond sooner, which contributes to a smoother experience.

- Round Trip Time: This metric is the time it takes for a browser to send a request and receive a response from the server. RTT, or Round Trip Time, is one of the most widely used metrics for assessing network latency and is measured in milliseconds.
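
Where ICMP ping is blocked or unavailable, round trip time can be approximated by timing a TCP handshake, which takes roughly one round trip. The following is a minimal sketch using only the Python standard library. The function names are illustrative, and `example.com:443` in the commented usage line is only a placeholder target.

```python
import socket
import statistics
import time


def tcp_connect_rtt_ms(host: str, port: int, samples: int = 5,
                       timeout: float = 2.0) -> list[float]:
    """Time TCP handshakes to host:port and return each duration in milliseconds."""
    # Resolve the name once up front so DNS lookup time is not counted as latency.
    sockaddr = socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)[0][4]
    target = (sockaddr[0], sockaddr[1])
    results = []
    for _ in range(samples):
        start = time.perf_counter()
        # create_connection returns once the three-way handshake has completed.
        with socket.create_connection(target, timeout=timeout):
            elapsed = time.perf_counter() - start
        results.append(elapsed * 1000.0)
    return results


def summarize(rtts_ms: list[float]) -> dict[str, float]:
    """Return min, average, and max, similar to the summary that ping prints."""
    return {"min": min(rtts_ms), "avg": statistics.mean(rtts_ms), "max": max(rtts_ms)}


# Example (requires network access):
# print(summarize(tcp_connect_rtt_ms("example.com", 443)))
```

This measures handshake time rather than true ICMP echo time, so the numbers can differ slightly from what ping reports, but the trend across samples is usually comparable.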

Calculating Throughput

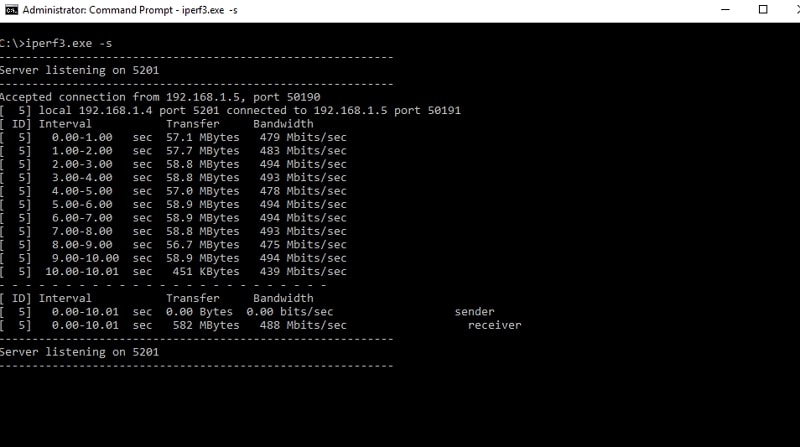

Throughput measures the amount of data actually transferred from one point to another in a given amount of time. It is usually measured in bits per second (bps) or larger units like Mbps or Gbps. Several tools can measure throughput, and two common ones are described briefly below:

- iPerf/iPerf3: This open-source network testing tool generates traffic between two hosts and measures the maximum throughput achieved between them.

- Speedtest.net: Using this popular speed-testing tool, users can measure downstream and upstream throughput between their device and the internet.
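
The principle behind these tools is simple: push a known amount of data through a connection and divide by the elapsed time. The sketch below illustrates that idea over a loopback TCP connection using only the standard library. It is not how iPerf3 or Speedtest.net are implemented, the function name is an assumption, and because the traffic never leaves the machine, the result reflects the local network stack rather than a real network path.

```python
import socket
import threading
import time


def measure_loopback_throughput(total_bytes: int = 50_000_000,
                                chunk_size: int = 65536) -> tuple[int, float]:
    """Send total_bytes over a local TCP connection; return (bytes received, Mbps)."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
    server.listen(1)
    port = server.getsockname()[1]
    received = 0

    def receiver() -> None:
        nonlocal received
        conn, _ = server.accept()
        with conn:
            while True:
                data = conn.recv(chunk_size)
                if not data:  # empty read means the sender closed the connection
                    break
                received += len(data)

    thread = threading.Thread(target=receiver)
    thread.start()

    payload = b"\0" * chunk_size
    sent = 0
    start = time.perf_counter()
    with socket.create_connection(("127.0.0.1", port)) as client:
        while sent < total_bytes:
            n = min(chunk_size, total_bytes - sent)
            client.sendall(payload[:n])
            sent += n
    thread.join()  # wait until the receiver has drained everything
    elapsed = time.perf_counter() - start
    server.close()

    return received, received * 8 / elapsed / 1e6


if __name__ == "__main__":
    got, mbps = measure_loopback_throughput()
    print(f"received {got} bytes at {mbps:.0f} Mbps")
```

To measure a real path, the sender and receiver would need to run on different hosts, which is what a dedicated tool such as iPerf3 handles for you.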

Differences Between Latency and Throughput

Latency and throughput are easy to confuse, but they measure different things. The sections above have already pointed out some of the distinguishing factors. The comparison table below summarizes the differences between latency and throughput:

| Metrics | Latency | Throughput |
|---|---|---|
| Definition | The time it takes for a data packet to travel from source to destination. | The amount of data transmitted over a network in a given time. |
| Measuring Unit | Typically measured in milliseconds (ms). | Measured in bits per second (bps) and its multiples, such as kbps, Mbps, and Gbps. |
| Focus | The delay in data transfer. | The volume of data transfer. |
| Impact on Network | High latency makes the network feel slow and causes interruptions. | Low throughput makes data transfers slow. |
| Importance | Essential for real-time applications, such as VoIP, online gaming, and video conferencing. | Essential for high-volume data transfer applications, such as file downloads. |
| Example Scenario | The time it takes for a web page to start loading after a user clicks a link. | The total amount of data that users can download in a second. |
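
A rough way to see which metric dominates is to model a transfer as one round trip plus the payload size divided by throughput. The sketch below uses that simplified model. The link figures and payload sizes are hypothetical, and real transfers add connection setup, slow start, and protocol overhead on top.

```python
def transfer_time_s(size_bytes: int, rtt_s: float, throughput_bps: float) -> float:
    """Rough model: one round trip to request, then the payload at a steady rate."""
    return rtt_s + size_bytes * 8 / throughput_bps


small, large = 2_000, 500_000_000  # a 2 kB API response and a 500 MB file

for label, rtt, rate in (
    ("low latency, modest throughput (10 ms, 20 Mbps)", 0.010, 20e6),
    ("high latency, high throughput (200 ms, 200 Mbps)", 0.200, 200e6),
):
    print(label)
    print(f"  small response: {transfer_time_s(small, rtt, rate) * 1000:.1f} ms")
    print(f"  large file:     {transfer_time_s(large, rtt, rate):.1f} s")
```

Under this model, the small response finishes far sooner on the low-latency link, while the large file finishes far sooner on the high-throughput link, which matches the "Importance" row of the table.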

Relationship Between Bandwidth, Latency, and Throughput

Bandwidth is a third parameter, closely related to latency and throughput, that represents the maximum possible data transfer rate of a link. Bandwidth constrains throughput: even if a network has high bandwidth, the actual throughput may be lower due to factors like network congestion.

So, throughput can never exceed bandwidth, and in practice it is usually lower. Latency and throughput are also related, as higher latency can significantly reduce the amount of data transferred per second, for example when a sender must wait for acknowledgments before sending more data. Latency and bandwidth are in turn related through a parameter known as the Bandwidth-Delay Product (BDP), which is the amount of data that can be in transit on the network at any given time. You can calculate it using the formula given below.

BDP = Bandwidth × Latency
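
As a worked example, the sketch below computes the BDP for a hypothetical 100 Mbps link with a 50 ms round trip time, taking latency in the formula to mean RTT. It also shows the flip side: if a sender may have only one window of data in flight per round trip, throughput is capped at window size divided by RTT, regardless of bandwidth. The function names and figures are illustrative assumptions.

```python
def bdp_bytes(bandwidth_bps: float, rtt_s: float) -> float:
    """Bandwidth-delay product: bytes that can be in flight on the path."""
    return bandwidth_bps * rtt_s / 8


def window_limited_throughput_bps(window_bytes: int, rtt_s: float) -> float:
    """Upper bound on throughput when at most one window is sent per round trip."""
    return window_bytes * 8 / rtt_s


# 100 Mbps link, 50 ms RTT: 5,000,000 bits, i.e. 625,000 bytes in flight.
print(bdp_bytes(100e6, 0.050))

# A 65,535-byte window on the same path caps throughput at roughly 10.5 Mbps.
print(window_limited_throughput_bps(65_535, 0.050) / 1e6)
```

In this example the window is about a tenth of the BDP, so the connection could use only about a tenth of the available bandwidth. This is one concrete way that high latency lowers throughput.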

In practice, networks with high bandwidth and low latency are ideal for applications that need high throughput. These applications include services such as streaming, online gaming, and communication platforms.

How Does ZEGOCLOUD Work With Throughput and Latency?

ZEGOCLOUD is a real-time communication service provider that offers various products for video calls and audio calls, live streaming, and other communication solutions.

When dealing with throughput and latency, here’s how ZEGOCLOUD typically addresses these aspects:

Throughput:

- Adaptive Bitrate Streaming: ZEGOCLOUD utilizes adaptive bitrate streaming to adjust the video quality based on the user’s current network conditions. This helps in maximizing the throughput and ensuring the best possible video and audio quality during calls and live streams.

- Efficient Codec Usage: The use of advanced codecs, such as H.264 and VP8, helps in optimizing data transmission, allowing for higher throughput even under limited bandwidth conditions.
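
To illustrate the general idea behind adaptive bitrate selection, the sketch below picks the highest rendition whose bitrate fits within a safety margin of the measured throughput. This is a generic illustration, not ZEGOCLOUD's actual algorithm. The bitrate ladder, the 0.8 safety factor, and the function name are all hypothetical.

```python
# Hypothetical bitrate ladder: (label, video bitrate in kbps), highest first.
LADDER = [("1080p", 4500), ("720p", 2500), ("480p", 1200), ("360p", 700), ("240p", 300)]


def pick_rendition(measured_kbps: float, safety_factor: float = 0.8) -> tuple[str, int]:
    """Pick the highest rendition that fits within a fraction of measured throughput."""
    budget = measured_kbps * safety_factor  # leave headroom for throughput dips
    for label, bitrate in LADDER:
        if bitrate <= budget:
            return label, bitrate
    return LADDER[-1]  # fall back to the lowest rung rather than stalling


print(pick_rendition(4000))  # ('720p', 2500)
print(pick_rendition(100))   # ('240p', 300)
```

A production implementation would typically also smooth the throughput estimate over time and avoid switching renditions too frequently.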

Latency:

- Low Latency Protocols: ZEGOCLOUD employs protocols designed to reduce latency, essential for real-time communication applications. The use of WebRTC (Web Real-Time Communication) is a common approach, which is well-regarded for its low latency characteristics.

- Server and Network Optimization: By strategically placing servers around the globe and optimizing routing paths, ZEGOCLOUD can significantly reduce latency, making the communication as real-time as possible.

- Edge Computing: Utilizing edge computing solutions to process data closer to the end-user also helps in reducing the latency further.

In addition, ZEGOCLOUD likely employs various other technologies and methods to handle issues related to network fluctuations, such as packet loss, jitter, and variable network speeds, all of which can affect throughput and latency. These might include techniques like packet retransmission, jitter buffering, and error correction codes.
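
Jitter, mentioned above, is the variation in packet delay rather than the delay itself. One widely used way to quantify it is the running interarrival jitter estimate defined for RTP in RFC 3550. The sketch below follows that formula. It is a generic illustration with hypothetical timestamps and says nothing about how ZEGOCLOUD measures jitter internally.

```python
def interarrival_jitter(send_times: list[float], recv_times: list[float]) -> float:
    """Running jitter estimate in the style of RFC 3550, in the input's time unit."""
    jitter = 0.0
    for i in range(1, len(send_times)):
        # Change in transit time between consecutive packets.
        d = (recv_times[i] - recv_times[i - 1]) - (send_times[i] - send_times[i - 1])
        # Exponential smoothing with gain 1/16, as in the RFC.
        jitter += (abs(d) - jitter) / 16
    return jitter


# Hypothetical packets sent every 20 ms over a path with 50 ms one-way delay.
send = [0, 20, 40, 60]
steady = [50, 70, 90, 110]   # constant delay: no jitter
bumpy = [50, 70, 95, 110]    # third packet arrives 5 ms late

print(interarrival_jitter(send, steady))  # 0.0
print(interarrival_jitter(send, bumpy))   # greater than 0
```

A constant delay, however large, produces zero jitter. That is why latency and jitter are tracked as separate metrics, and why a jitter buffer sized from an estimate like this can smooth playback.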

For the most accurate and detailed explanation of how ZEGOCLOUD manages throughput and latency in its services, check the official documentation or contact the support team.

Conclusion

Throughout this article, we have discussed latency, bandwidth, and throughput to help you understand their differences and relationships. All these parameters play a major role in delivering high network quality and an optimized user experience with minimal delay and jitter. ZEGOCLOUD can help in this regard with its APIs, which assist you in building low-latency, high-throughput applications.
